Before I became a naturalized U.S. citizen a few years ago, I was routinely flagged at the airport whenever I returned from overseas. The process was simple in theory: a facial recognition machine scanned my face and printed a slip with either a checkmark for “cleared” or a big X for further inspection. Every single time, I got the X. I’d then be escorted to a secondary screening area, often alongside others who, like me, had darker skin. Sometimes I’d be held there for an hour or two.

Later, when I started working in the AI industry, I realized what was likely happening behind the scenes: these facial recognition systems were trained on data that associated brown and black faces with higher risk—so people like me were consistently flagged. At its core, the dataset wasn’t diverse enough; it perpetuated harmful stereotypes by effectively “teaching” the AI to see darker skin as suspicious. As a result, the technology ended up racially profiling travelers at airports.

This underscores a critical point: AI systems learn from the data we feed them. If that data is skewed—labeling certain features as suspicious—or if it simply isn’t diverse enough, the software will amplify those biases across entire populations. In essence, what goes in determines what comes out.

AI as Our Collective Selfie

Artificial Intelligence, despite sounding futuristic, isn’t created in a vacuum. We feed it data—our data. It reflects the habits, quirks, and yes, prejudices we’ve displayed in the real world. Think of an AI system like a child soaking up knowledge from its parents: if the parents keep saying “fuck,” guess what the child picks up?

On a practical level, these systems often rely on machine learning algorithms—techniques that spot patterns in large datasets. That can be powerful when the dataset fairly represents everyone. Imagine an AI-based hospital triage system that uses patient data to quickly identify critical cases, ensuring each person gets timely care. This can address the limitations of older, purely manual processes. But if the algorithms are trained on biased or incomplete data—or if they aren’t monitored for fairness—the same technology can magnify harmful prejudices.
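One way to picture what "monitoring for fairness" can mean in practice is to compare how often a system wrongly flags harmless people in each group. The sketch below is illustrative only: the `audit_log` records, the group names "A" and "B", and the function name are all made up for this example, and a real audit would need real outcome data and far more care.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return {group: share of harmless people who were flagged anyway}.

    Each record is a tuple: (group, was_flagged, was_actually_risky).
    """
    harmless = defaultdict(int)
    flagged_harmless = defaultdict(int)
    for group, was_flagged, was_risky in records:
        if not was_risky:
            harmless[group] += 1
            if was_flagged:
                flagged_harmless[group] += 1
    return {g: flagged_harmless[g] / harmless[g] for g in harmless}


# Hypothetical audit log (assumption): both groups contain the same number
# of truly risky cases, yet group B's harmless members are flagged more often.
audit_log = (
    [("A", False, False)] * 90 + [("A", True, False)] * 10
    + [("B", False, False)] * 60 + [("B", True, False)] * 40
    + [("A", True, True)] * 5 + [("B", True, True)] * 5
)

rates = false_positive_rate_by_group(audit_log)
for group, rate in sorted(rates.items()):
    print(f"group {group}: false positive rate = {rate:.2f}")
```

A large gap between groups does not explain why the system behaves this way, but it is the kind of signal that should prompt a closer look at the training data.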

Sometimes real-world data isn’t diverse enough or has major gaps. In that case, developers may turn to synthetic data: artificially generated examples that help fill those holes so models behave more fairly across the board. The idea is to expose the system to varied data (for example, different skin tones, age groups, body shapes, weather patterns, and flight routes) so it can perform well across many contexts, whether it’s guiding patient care, managing air traffic, forecasting weather, or predicting product demand. Of course, synthetic data itself must be carefully designed and tested with algorithmic fairness in mind; otherwise, it can reinforce the same blind spots. Nonetheless, when done responsibly, it’s an invaluable tool for building AI systems that are more robust and inclusive.
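Before reaching for synthetic data, it helps to know where the gaps actually are. Here is a minimal sketch of a representation check, assuming each training example carries a single group label. The group names, the 20% floor, and the function name are hypothetical choices for illustration, not a recommended standard.

```python
from collections import Counter


def representation_gaps(labels, expected_groups, min_percent):
    """Return {group: extra examples needed} for under-represented groups.

    The target is min_percent of the *current* dataset size, so this is a
    rough first estimate of the gap, not a full rebalancing procedure.
    """
    counts = Counter(labels)
    total = len(labels)
    # Ceiling division with integers avoids floating-point rounding surprises.
    target = (min_percent * total + 99) // 100
    gaps = {}
    for group in expected_groups:
        have = counts.get(group, 0)  # a group may be missing entirely
        if have < target:
            gaps[group] = target - have
    return gaps


# Hypothetical dataset (assumption): 1,000 face images labeled by skin tone.
labels = ["light"] * 800 + ["medium"] * 150 + ["dark"] * 50
gaps = representation_gaps(labels, ["light", "medium", "dark"], min_percent=20)
print(gaps)
```

Passing the list of expected groups explicitly matters: a group with zero examples would never show up if we only counted what is already in the data.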

Ultimately, a good AI system—whether it relies on real-world or synthetic data—requires inclusivity, diversity, and equity in its underlying datasets.

That diversity isn’t just about personal attributes—it’s also about capturing the commercial, environmental, and operational factors a system might face. By broadening what we feed into our algorithms, we foster technology that genuinely reflects the reality of the world we live in.

This is exactly where DEI initiatives come in: they are structured efforts to ensure that many different voices and experiences are included—so that the data and decisions reflect everyone’s reality, not just a privileged few. But how does DEI fit in here, and where did it come from in the first place? Let’s briefly examine how these initiatives arose—and why they matter not only in social or legal frameworks but also in the evolving landscape of AI.

A Quick Look Back: Why We Even Have DEI

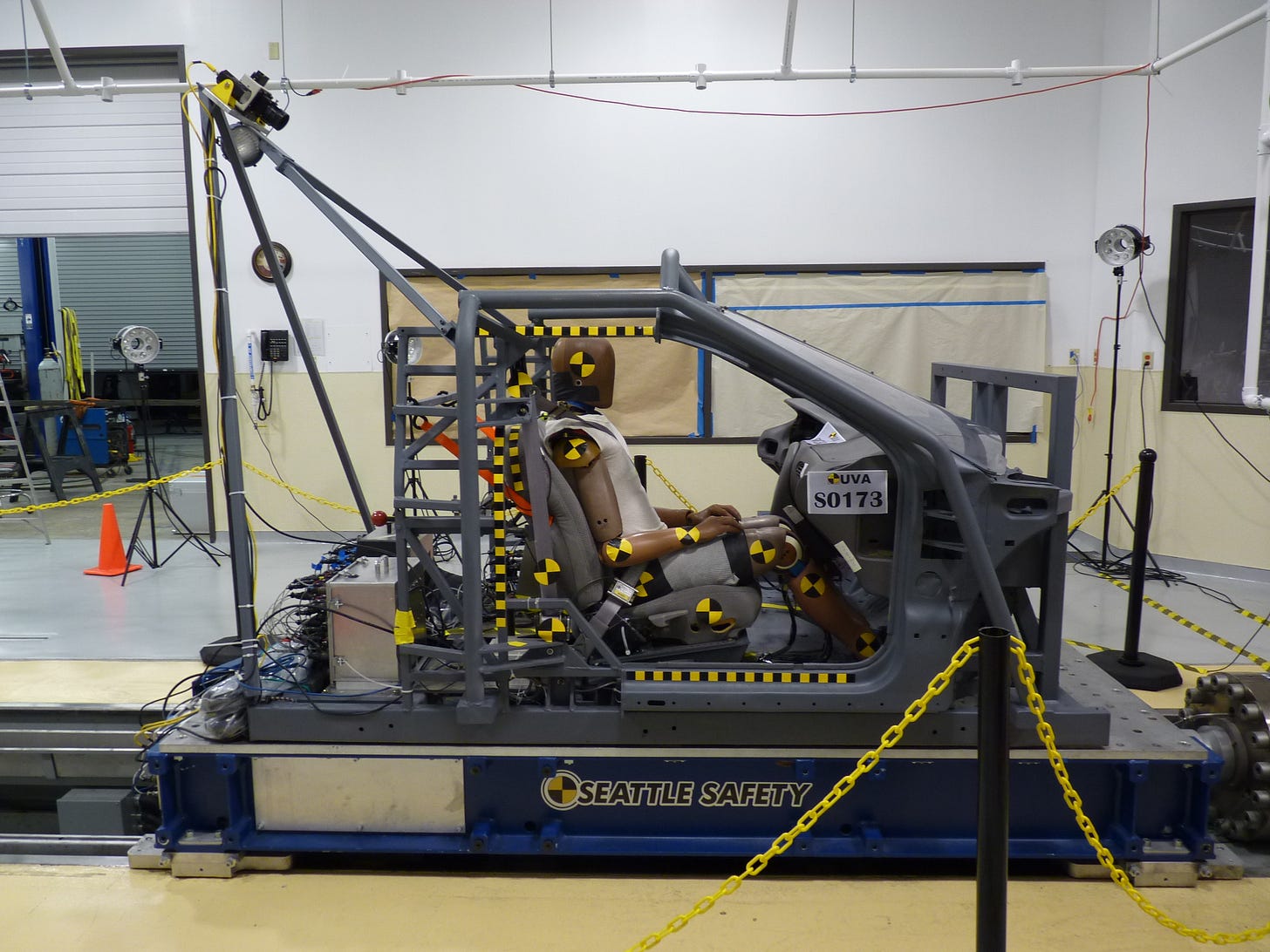

Diversity, Equity, and Inclusion (DEI) initiatives weren’t invented just because companies felt like being nice—they were corrective measures for unfair systems. Take crash test dummies, for example. For years, they were modeled on a single, average male body, which meant women faced a higher risk of serious injury in car accidents—an avoidable flaw that cost real lives. Over time, advocates pressured carmakers to add female-bodied crash dummies, leading to more equitable safety improvements. In effect, this was a deliberate act of inclusion.

That’s the core of DEI: it closes gaps, ensures fair representation, and, frankly, often makes life safer. The same principle now guides AI development: if we test systems for bias from the start and include diverse data, AI can solve big problems. If we don’t, we end up with hiring tools or security checks that exclude or profile people simply because they look like the “wrong” demographic in a flawed dataset. We get a healthcare algorithm that overlooks symptoms in older patients or people with disabilities because it never “learned” to recognize them, or a loan-approval system that flags entire neighborhoods as risky based on historically biased data, locking people out of financial opportunities they deserve.
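Testing for bias at the start can begin with something very simple. One widely used screening heuristic from U.S. employment practice is the "four-fifths rule": if one group's selection rate is below 80% of the highest group's rate, that is treated as a signal worth investigating. The sketch below applies that heuristic to loan approvals purely as an illustration; the neighborhood names and numbers are invented, and borrowing the 0.8 threshold for lending is an assumption of this example.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, approved). Returns {group: approval rate}."""
    totals, approvals = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Divide each group's approval rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any approvals; ratios are undefined")
    return {g: rate / best for g, rate in rates.items()}


# Hypothetical loan decisions (assumption) for two made-up neighborhoods.
decisions = (
    [("north", True)] * 60 + [("north", False)] * 40
    + [("south", True)] * 30 + [("south", False)] * 70
)

ratios = impact_ratios(selection_rates(decisions))
flagged = sorted(g for g, r in ratios.items() if r < 0.8)
print("impact ratios:", ratios)
print("groups below the four-fifths threshold:", flagged)
```

A ratio under the threshold is not proof of discrimination, and a ratio above it is not proof of fairness. It is a cheap first check that tells you where to dig.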

Think of AI as an evolving organism—like our own communities—that needs a broad, inclusive foundation to thrive.

Just as human societies grow stronger by embracing diversity, AI systems grow more reliable and fair when they are trained on data reflecting different real-world perspectives. Without that diversity, both people and machines can end up reinforcing the same narrow viewpoints, often to everyone’s detriment or, God forbid, demise.

Trump and the Rolling Back of Diversity, Equity, and Inclusion

Recently, there’s been a push, spearheaded by the Trump administration’s directives, to dismantle many federal DEI efforts. Some organizations followed suit, slashing or even eliminating their diversity offices and training programs. On the surface, this might look like an attempt to cut red tape or reduce costs. And for some in majority groups, DEI can feel “unfair,” like a threat to opportunities they have come to expect. It’s understandable to be uneasy about changes that shift the balance. However, DEI isn’t about punishing or replacing anyone; it’s about correcting historical imbalances so that everyone can thrive. Once you consider why DEI structures were introduced in the first place, the danger of removing them becomes clear.

We put these programs in place to counteract biases that cause real harm. Removing them might save a few dollars today, but it risks unraveling the progress we’ve made—progress ensuring that people who look, speak, or identify differently aren’t left out. And if AI learns from data that’s increasingly regressive or exclusionary, it will compound those biases, reflecting them in our workplaces, universities, and government programs.

The Complexity of Agentic AI

What happens when AI doesn’t just help us but makes decisions on its own? We call this agentic AI—software capable of acting with minimal human input. It magnifies the risks we’ve discussed because if it picks up biased data or instructions, it can spread those biases across a vast range of decisions. Some agentic systems rely on advanced techniques like reinforcement learning or deep neural networks, which can be tough to interpret—making hidden biases even harder to spot.

That’s where a diverse, equity-focused environment is crucial: we need people with varied perspectives, and the organizational will, to question assumptions, spot bias in code, and raise red flags about suspicious outcomes. Rolling back DEI, whether at a federal or institutional level, doesn’t necessarily mean a “DEI team” was personally reviewing AI systems; rather, it often signals a broader shift away from inclusive hiring, training, and oversight practices. If leadership no longer prioritizes fairness and representation, the impetus and resources for detecting bias diminish.

Over time, that can trigger a harmful cycle: agentic AI makes biased decisions based on flawed real-world data, those decisions feed into new training data, and future iterations of the system become even more skewed, growing increasingly unfair and even dangerous.
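To make that cycle concrete, here is a deliberately simplified toy model. It assumes two groups with identical true risk and a system that splits its inspections between them. Everything here is an assumption made for illustration, including the `amplification` exponent, which stands in for a retrained model leaning harder on whichever group dominates its own records. It is not a description of any real screening system.

```python
def simulate_feedback_loop(initial_share_b, rounds, amplification=1.5):
    """Track the share of inspections aimed at group B across retraining rounds.

    Both groups are assumed to be equally risky, so any drift away from the
    starting share is produced by the loop itself, not by anyone's behavior.
    """
    share_b = initial_share_b
    history = [share_b]
    for _ in range(rounds):
        # Recorded "hits" simply mirror where the system chose to look.
        hits_b = share_b
        hits_a = 1.0 - share_b
        # Retraining on those records (toy assumption): an exponent above 1
        # over-weights the group that already dominates the training data.
        weight_b = hits_b ** amplification
        weight_a = hits_a ** amplification
        share_b = weight_b / (weight_a + weight_b)
        history.append(share_b)
    return history


skewed = simulate_feedback_loop(initial_share_b=0.6, rounds=5)
balanced = simulate_feedback_loop(initial_share_b=0.5, rounds=5)
print("skewed start:  ", [round(s, 3) for s in skewed])
print("balanced start:", [round(s, 3) for s in balanced])
```

In this toy setup, a modest initial tilt toward group B grows with every round, while a balanced start stays balanced. The point is not the specific numbers but the shape: when a system learns from records of its own decisions, a small skew can compound.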

What It Means for All of Us

This isn’t just about politics; it’s about inclusion vs. exclusion, innovation vs. stagnation, and safety vs. risk. Historically, societies have progressed the most when they tap into everyone’s talents and perspectives—whether in medicine, engineering, the arts, or business. This is especially true for AI, which relies on balanced, diverse datasets—sometimes supported by carefully crafted synthetic data—to function effectively across all populations.

We must remember that Artificial Intelligence isn’t just technology; it’s socio-technical by nature. There are clear parallels between human societies and AI systems: these systems mimic human thought processes, learn from human behavior, and, in the not-too-distant future, might even perceive themselves as sentient.

Ultimately, AI is a mirror—it doesn’t invent biases from thin air; it amplifies the ones already present in our data and culture.

By dismantling DEI and similar frameworks, we risk allowing prejudice to run unchecked—not only socially and politically but also technologically. AI systems are now deeply woven into our infrastructure, shaping decisions in healthcare, finance, and beyond.

The moral of the story? DEI isn’t simply about fairness or the lack of it; it’s about building systems, whether social, political, or technological, that truly serve all of us. It’s about recognizing that ignoring diverse perspectives in data and development can harm our collective good. And it’s about understanding that our equity policies don’t just shape office culture or public discourse; they directly influence how algorithms and automated decisions will steer our shared future, or potentially accelerate our societal collapse.

References

Bipartisan House Task Force Report on Artificial Intelligence - 118th Congress

The Guardian. Yes, women are in car accidents too. (Mar 31, 2024)

The Perpetual Line-Up: Unregulated Police Face Recognition in America (Georgetown Law Center on Privacy & Technology)

Harvard Business Review: What is Agentic AI, and How will it Change Work?

civilrights.org. Trump’s Executive Orders on Diversity, Equity, and Inclusion, Explained

MIT Technology Review. Inspecting Algorithms for Bias

The Verge. Amazon reportedly scraps internal AI recruiting tool that was biased against women

UNESCO. Ethics of Artificial Intelligence.

UC Berkeley. The Next Big Thing: Agentic AI’s Opportunities and Risks.